📈Your Team’s Performance

How to maximize your team’s performance with DORA Metrics.

Hey there 👋🏼

In today’s email:

Measure your tech team’s performance: Enter DORA, the explorer!

Review your notes: Why are you taking notes again?

Cool stuff on the web: The best stuff on the web.

Post of the week: 5-star on social media.

MEASURE YOUR TECH TEAM’S PERFORMANCE

As leaders, one thing we look for in a team is a low attrition rate, which brings stability and, consequently, better performance.

You’ll quickly realize that measuring performance is not trivial, but it’s not impossible either.

You can start by analysing how many tickets the team closes, how many deploys it makes, and even how many merge (pull) requests it reviews. You’ll see a lot of noise in these metrics, and the results do not always give you a clear and accurate picture of your team's performance.

So what else can we measure?

Enter DORA!

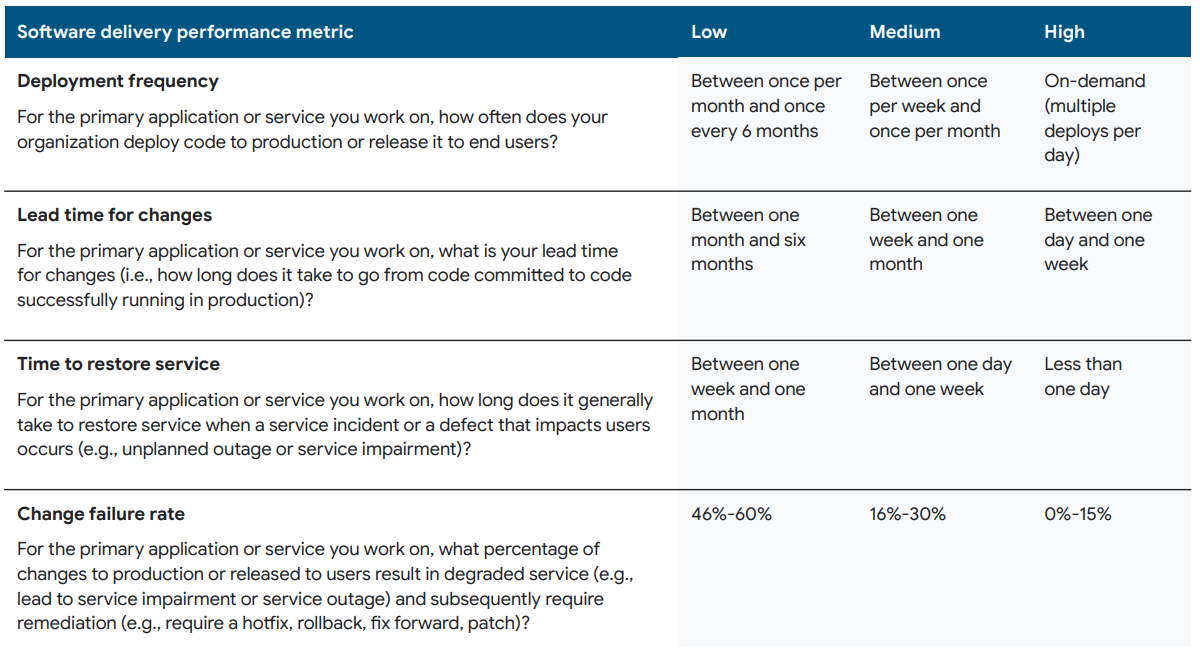

DORA stands for DevOps Research and Assessment. DORA metrics measure a tech team’s performance and efficiency. You analyze four metrics:

Lead Time for Changes (LT) - How long it takes your team to take a piece of code from commit to production

Deployment Frequency (DF) - How often your team successfully releases code into production

Change Failure Rate (CFR) - The rate of your deploys that caused failures in production

Mean Time to Recovery (MTTR) - How long it takes your team to recover from a failure in production
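
If you want to see how the four metrics above could be calculated, here is a minimal sketch in Python. It assumes you can export one record per deploy with a commit time, a deploy time, a failure flag and a recovery time. The `Deploy` class and its field names are made up for illustration and don't come from any specific tool, and recovery time is measured from the deploy itself as a simplification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Optional


@dataclass
class Deploy:
    """Hypothetical record of one production deploy."""
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a failure in production?
    recovered_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deploys: list[Deploy], period_days: int) -> dict[str, float]:
    """Compute the four DORA metrics over a window of `period_days` days."""
    if not deploys:
        raise ValueError("need at least one deploy to compute metrics")
    failures = [d for d in deploys if d.failed]
    recovered = [d for d in failures if d.recovered_at is not None]
    return {
        # Lead Time for Changes: average time from commit to production
        "lead_time_hours": mean(hours(d.deployed_at - d.committed_at) for d in deploys),
        # Deployment Frequency: deploys per day in the window
        "deploys_per_day": len(deploys) / period_days,
        # Change Failure Rate: share of deploys that caused a failure
        "change_failure_rate": len(failures) / len(deploys),
        # Mean Time to Recovery: average time from failed deploy to recovery
        "mttr_hours": mean(hours(d.recovered_at - d.deployed_at) for d in recovered)
        if recovered
        else 0.0,
    }


# Made-up sample data: two deploys in one week, one of which failed.
t0 = datetime(2023, 1, 2, 9, 0)
sample = [
    Deploy(t0, t0 + timedelta(hours=4)),
    Deploy(t0, t0 + timedelta(hours=8), failed=True,
           recovered_at=t0 + timedelta(hours=10)),
]
print(dora_metrics(sample, period_days=7))
```

Averages are used here to keep things simple. Depending on how noisy your data is, a median might give you a fairer picture.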

Google created a matrix to, very generally, classify a team’s level of performance. Since 2018, a team could be positioned in four levels: low, medium, high and elite performers. The 2022 report revisited this, and a team is now classified into one of three categories: low, medium and high.

While the metrics above measure delivery performance, Google added a fifth metric in 2021 to measure operational performance: Reliability. This allows teams to measure more than just the availability of their service. They should also focus on latency, performance and scalability.

So I would suggest you start creating a matrix and review your team’s performance in two vectors:

If your team only works within one context/service, measure for that context.

If your team works with several contexts/services, measure each, so you get a clear picture of your team and how each context classifies. This gives you more opportunities for specific improvement.
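
As a rough sketch of measuring each context/service separately, the Python snippet below groups a deploy log by service and reports two of the metrics for each. The service names, the log format and the `per_service_summary` function are all hypothetical; adapt them to whatever your deploy tooling can export.

```python
from collections import defaultdict

# Hypothetical deploy log: (service name, did the deploy cause a failure?)
deploy_log = [
    ("checkout", False),
    ("checkout", True),
    ("search", False),
    ("search", False),
    ("search", False),
    ("checkout", False),
]


def per_service_summary(log, period_days):
    """Group deploys by service and report frequency and failure rate for each."""
    grouped = defaultdict(list)
    for service, failed in log:
        grouped[service].append(failed)
    return {
        service: {
            "deploys_per_day": len(outcomes) / period_days,
            # True counts as 1, so the sum is the number of failed deploys
            "change_failure_rate": sum(outcomes) / len(outcomes),
        }
        for service, outcomes in grouped.items()
    }


summary = per_service_summary(deploy_log, period_days=30)
for service, metrics in summary.items():
    print(service, metrics)
```

Looking at the numbers side by side makes it easier to spot which context needs attention first.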

If you want to read more about tech team metrics, how to measure performance and improve the way your engineers work, here are some key links:

REVIEW YOUR NOTES

Why are you really taking notes?

This week I got annoyed (again) at how little I act on the notes I take during meetings and quick calls, or on the things that come to mind.

I started questioning why I was taking notes, the quality of my notes and why I was keeping them in the first place.

The conclusion is that 75% of the notes I had were… temporary.

I reviewed the notes I’ve taken since January 1st, and this was the categorization I found:

Drafts: I use this a lot. Most of my notes are drafts. When I’m going to write a long message, a performance review, etc., these are temporary notes that lose their purpose when I send them to their final destination.

Meeting Notes: I’m terrible at taking good meeting notes, at least good enough notes that will be useful in the future. I already created actions on my end to improve my meeting notes skills. Meeting Notes are meant to stick around for future reference.

Research: These are my valuable notes. Links, summaries, etc., about topics (tech and not tech) that make my life easier. These are my golden notes. They’re meant to evolve. This is where I think the Zettelkasten method can and should be used.

Misc Notes: If drafts are temporary, these are proper throwaways. Supermarket lists, quick scribbles, etc. These are sometimes promoted to another category but are at the lowest level of importance.

Most of my notes were in the drafts section.

Stopping and categorizing my notes, in a way, relaxed me and helped me realize where I needed to devote my energy.

For a while, I’ve also been keeping a Leadership Journal, where I write down key leadership actions, encounters, and lessons I learn daily. I keep my content creation in a separate thread.

Let me launch the challenge, then.

Evaluate ALL the notes you wrote since the start of the year.

In what categories would you place them? And for how long should you store them?

Does this change anything in the way you look at your notes?

COOL STUFF ON THE WEB

🐦 Stop, look at the image below and be amazed at the Notionverse

🎧 Developing Leadership: The Recession and Engineering Leadership in 2023

🧩 Real-world Engineering Challenges #8: Breaking up a Monolith

Hi Luis :)

Your newsletter is really helpful. Thank you :)

About DORA metrics, do you think Jellyfish is a powerful tool to use in order to measure these metrics?

Thanks :)